Advanced Video Frame Prediction and Segmentation

A Dive into Masked Conditional Diffusion and U-Net

[Project Paper] [Presentation] [Code]

The field of video frame prediction and image segmentation has witnessed remarkable advancements, driven by innovative machine learning models. This blog post explores a cutting-edge project that combines Masked Conditional Video Diffusion (MCVD) for video frame prediction and the U-Net architecture for image segmentation. The study demonstrates how these models synergize to tackle the challenges of generating future video frames and analyzing them with precision.

Understanding the Problem

Video frame prediction involves forecasting future frames of a video sequence based on its preceding frames. This task is crucial for applications like autonomous driving, robotics, and video editing. However, accurately predicting high-quality frames remains a challenging endeavor due to the complexity of dynamic scenes. In this project, the goal was to predict the 22nd frame of a video sequence using only the first 11 frames. Following this, the predicted frames were segmented to identify various objects and regions within them, enabling detailed scene analysis.

Methodology

- Future Frame Generation with MCVD The Masked Conditional Video Diffusion (MCVD) model served as the backbone for future frame prediction. MCVD is a probabilistic denoising diffusion model that iteratively refines noisy inputs, conditioned on past frames, to generate coherent future frames. Its key features include:

- Learning a distribution of video data to predict plausible future states.

- Conditioning on past frames in a sliding window manner to ensure temporal consistency.

This approach is particularly effective in capturing the dynamics of complex scenes, making it ideal for tasks requiring an understanding of future states.

- Image Segmentation with U-Net Once the future frames were generated, the next step was to segment these frames using the U-Net architecture. U-Net is a convolutional neural network designed for image segmentation tasks. It is characterized by:

- A contracting path that captures contextual information.

- An expanding path that enables precise localization.

The U-Net model excels in segmenting complex scenes with high accuracy, even when trained on limited datasets. This makes it well-suited for analyzing synthetically generated video frames. Results

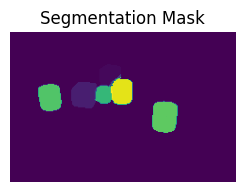

Segmentation Performance

The U-Net model achieved an impressive segmentation accuracy of over 96% on the validation set. This highlights its ability to effectively delineate and classify objects within video frames, providing valuable insights into scene composition.

Future Frame Prediction Performance

The MCVD model’s performance was evaluated using different sampling scenarios:

- With 100 DDPM samplings, it achieved a Jaccard index score of approximately 0.12.

- Increasing the sampling rate to 500 improved the score to 0.20.

- A reduced model size (two-thirds of the original capacity) resulted in a lower score of 0.06.

- The best results were obtained with 1,000 samplings, achieving a Jaccard index score of 0.36.

These results indicate that higher sampling rates enhance prediction accuracy by capturing finer details in dynamic scenes.

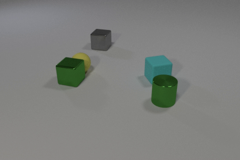

Samples

Key Insights and Limitations

Strengths

- The combination of MCVD and U-Net demonstrated robust performance in predicting future frames and segmenting them accurately.

- The generated images were sharp and free from blurring compared to outputs from other models.

Limitations

- The predicted images were relatively small in scale.

- Inference times were long when generating clearer images.

- Some objects were missed or incorrectly added during frame prediction, indicating room for improvement in model accuracy.

Conclusion

This project underscores the potential of combining advanced generative models like MCVD with powerful segmentation architectures like U-Net to tackle complex video processing tasks. While there are limitations to address—such as improving inference speed and object detection accuracy—the results highlight significant strides in predictive modeling and scene analysis. Future work could involve refining these models further by integrating mask generation during training or exploring alternative architectures to enhance performance. As machine learning continues to evolve, such innovations will undoubtedly pave the way for more sophisticated applications in video analysis and beyond.